Overview & Topics

Mainstream computer vision research often overlooks practical factors such as on-device latency, resource use, and power consumption. This year's ECV workshop focuses on efficiency as measured on real devices in the era of Foundation Models and Embodied AI.

Efficient and Scalable Perception Systems

- Foundation model efficiency, latency-aware training, and tunable accuracy-efficiency tradeoffs

- Scalable architectures (e.g., mixture-of-experts, vision transformers)

- Efficient representation learning for image, multimodal, 3D, and 4D data

- Optimization for edge deployment and low-power hardware

- Annotation-efficient pipelines (simulation, augmentation, active selection)

Generative and Foundation Models on Edge Devices

- Generative AI with language models: parallel decoding, long-context handling, and KV-cache management

- Multimodal generative models, VQA, and fusion of text, vision, audio, and sensor data

- Diffusion models: step distillation, adversarial distillation, knowledge distillation, and consistency models

- Vision-Language-Action models and interactive environments

3D Scene Understanding and Reconstruction in AR/VR

- 3D reconstruction from multi-view images, sensor data, or single images

- Real-time 3D perception, mapping, reconstruction, and rendering

- Compositional and open-vocabulary 3D scene understanding

- Robust segmentation and affordance prediction in complex scenes

- Efficient 3D/4D representation learning and compression (e.g., point clouds, 6DoF)

- Multi-view and multi-temporal consistency in 3D/4D generation

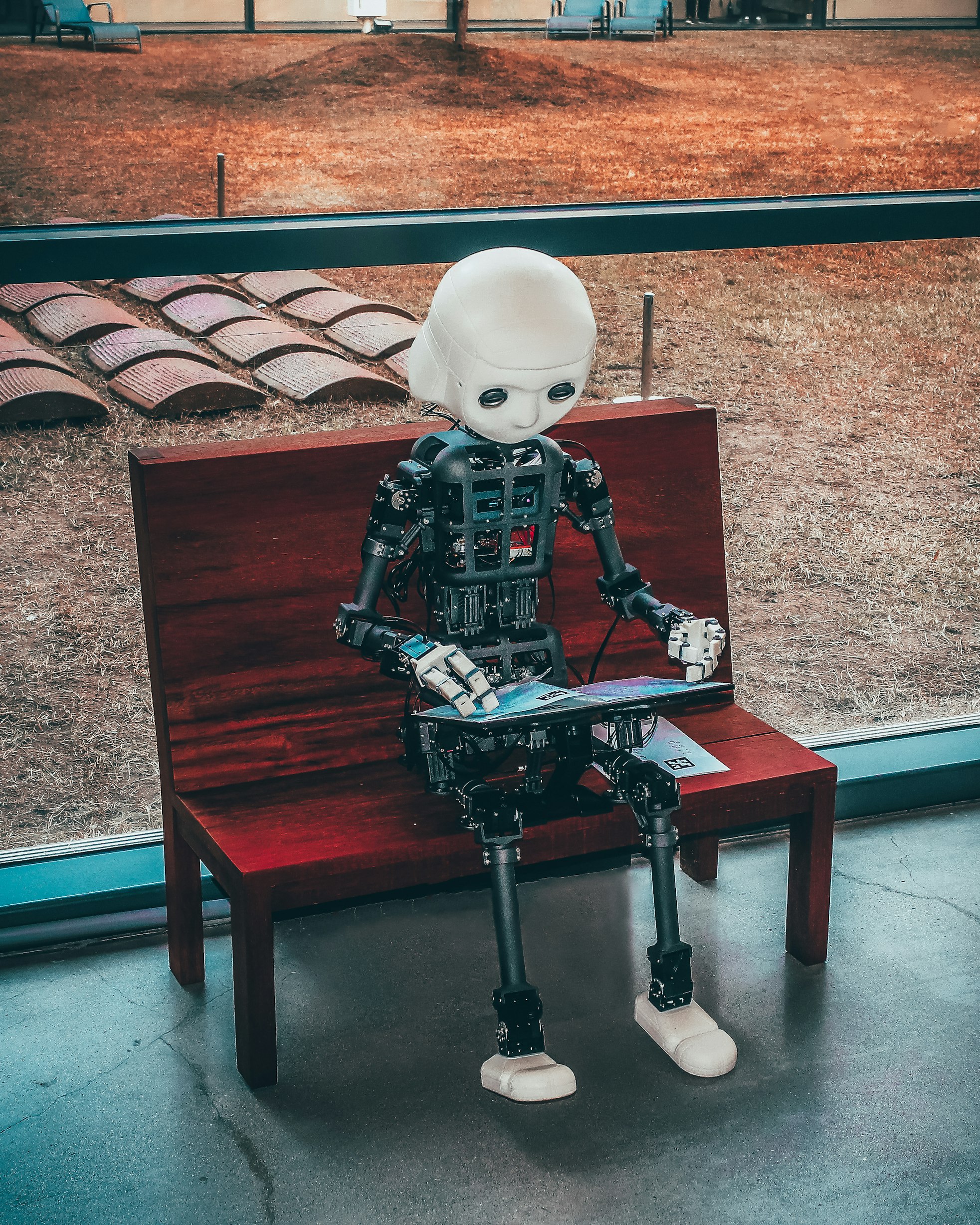

Embodied AI and Interactive Agents

- Robotics and embodied vision: active agents, simulation, causal/object-centric representations

- Dynamic modeling of environments and objects for manipulation and navigation (VLA)

- Few-shot and adaptive learning for Vision-Language-Action (VLA) agents in open environments

- Closed-loop policy learning for task completion in cluttered environments

- Integration of human cues (gaze, pose) into perception and decision-making

- Continual learning, adaptive memory, and long-horizon reasoning

- Evaluation frameworks for perception, action, and memory in embodied systems

Avatars, Neural Rendering, and Personalization

- Expressive avatar synthesis and display for human-robot interaction (HRI)

- Neural rendering for dynamic humans and avatars

- Personalization from limited data (few snapshots, generalizable enrollment)

- Relighting, appearance adaptation, and editing under varied conditions

- Consistency and realism across environments and devices

- Efficient streaming and transmission of animated avatars

Egocentric and Social Perception

- Egocentric hand/body tracking and human-object interaction

- Emotion-aware communication and feedback in interactive systems

- Strategies for social presence and trust in companion robots

- Methods for continual adaptation and personalization in interactive systems

Potential Impacts

Efficient computer vision on mobile, wearable, and robotic devices enables new applications across many industries, including the following.


Healthcare & Fitness

- Remote Monitoring: Wearable devices that monitor vital signs and physical activity.

- Early Diagnosis: Enhanced image processing for early detection of skin conditions, eye disease, and other disorders.


Industrial Applications

- Quality Control: Automated product inspection for manufacturing.

- Maintenance and Repair: Wearable devices guiding technicians through complex repairs.


Accessibility

- Assistance for the Visually Impaired: Identifying objects and reading text aloud.

- Gesture Recognition: Enabling touchless interaction for users with physical disabilities.


Environmental Monitoring

- Wildlife Tracking: Monitoring animal movements and activity using edge devices.


Robotics

- Extended Operational Time: Lower energy consumption extends battery life.

- Real-Time Processing: Faster visual processing for responsive actions.

- Increased Mobility: Compact processing units allow for smaller robots.

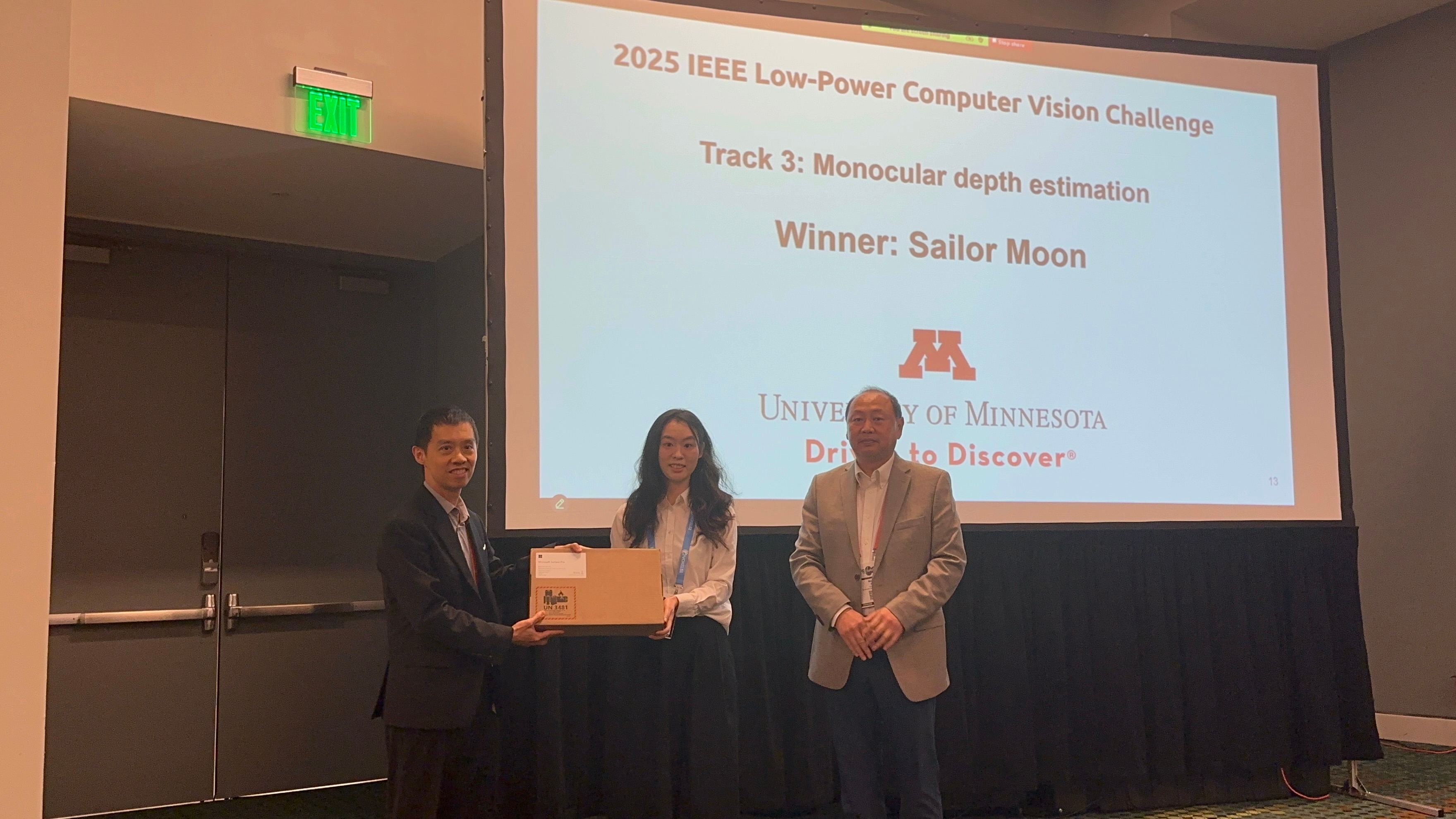

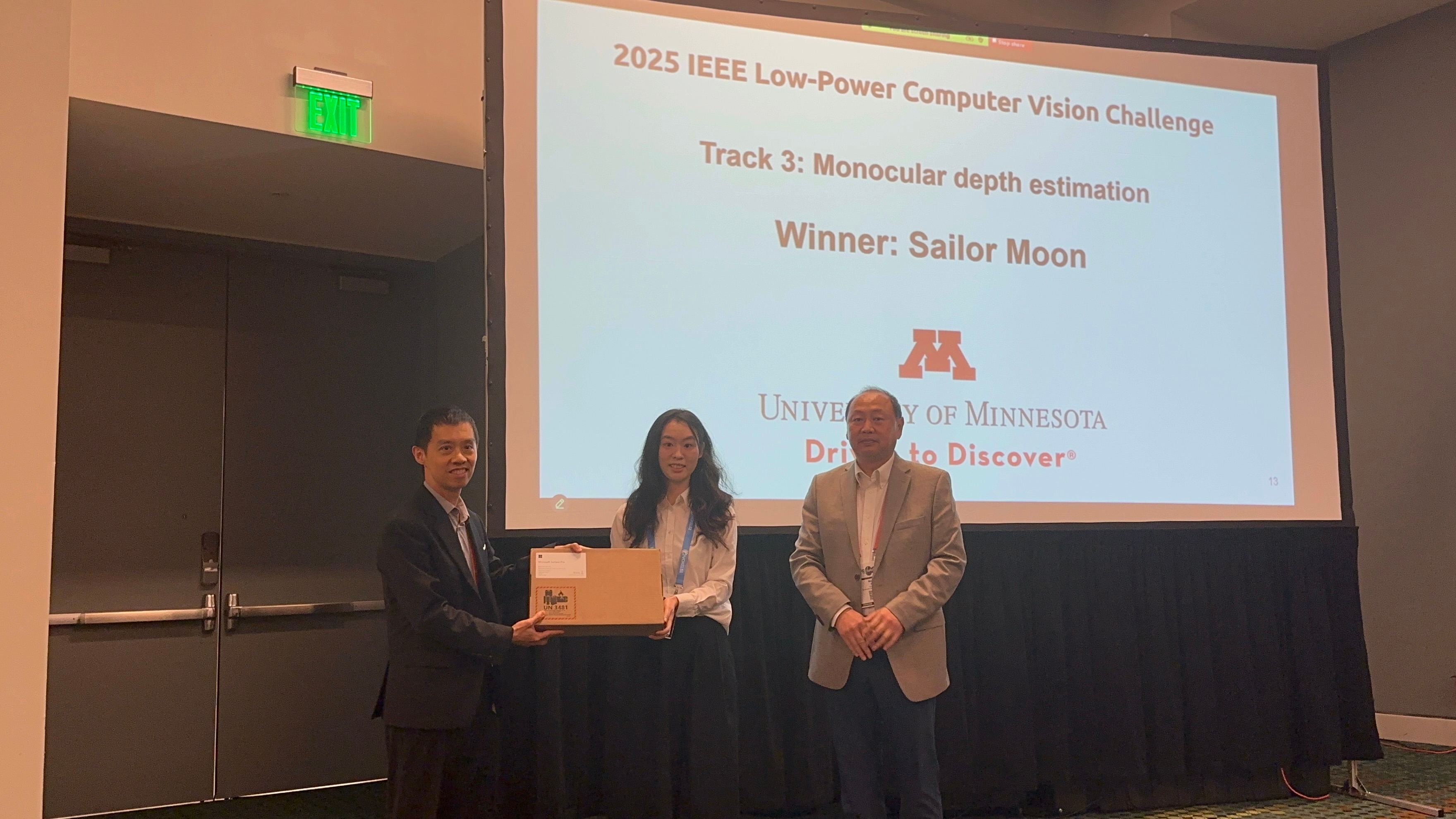

Highlights from the 8th ECV Workshop (CVPR 2025)